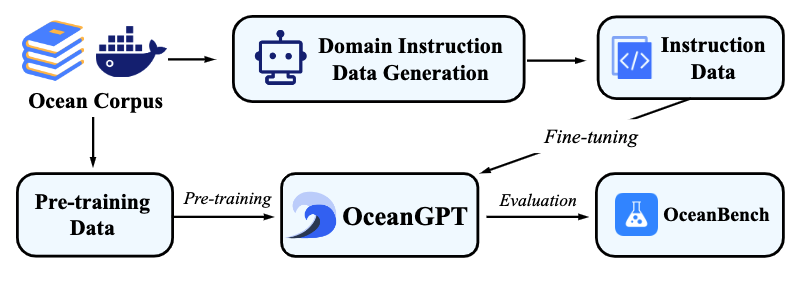

Covering approximately 71% of the Earth’s surface, the ocean plays a crucial role in global climate regulation, weather patterns, biodiversity, and human economic development. Ocean science studies the ocean's natural characteristics and how they change, together with the theories, methods, and applications involved in developing and utilizing ocean resources. To support this research, we propose OceanGPT, a large language model designed specifically for the ocean domain. It can handle a range of ocean science tasks, including question answering and content generation. We also explore the model's potential to simulate underwater robot operations, as a step toward model-driven underwater embodied intelligence.

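Evolving data synthesis agent: This agent combines two collaborative strategies. The first supplements and expands the background knowledge of the seed samples; the second refines the analysis to deepen and improve the knowledge contained in the seed data.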

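Fine-tuned literature reading agent: A large language model is first fine-tuned into a specialized literature-extraction model, which enables the agent to extract high-quality sentences from the vast body of ocean literature.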

Quality assurance review agent: Specific syntactic and semantic rules related to ocean science are predefined, and the agent is built through prompting to filter the data against these rules and ensure the quality of the generated data.
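The three agents described above can be pictured as stages of one data pipeline: synthesize candidates from seeds, mine sentences from literature, then filter everything through rules. The sketch below is a minimal, hypothetical illustration of that flow and is not the project's implementation. It assumes that each LLM call can be modeled as a plain `prompt -> text` callable, and every name in it (`evolve`, `extract_sentences`, `quality_filter`, `non_empty`, `min_words`, `mentions_term`, `toy_model`) and every rule and prompt is an illustrative placeholder.

```python
# Hypothetical sketch of a three-agent instruction-data pipeline.
# All names, prompts, and rules below are illustrative assumptions;
# LLM calls are replaced by plain callables so the flow runs offline.
import re
from dataclasses import dataclass
from typing import Callable, Iterable, List

# A "model" maps a prompt to a completion; in practice it would wrap an LLM.
Model = Callable[[str], str]


@dataclass
class Sample:
    instruction: str
    response: str


def evolve(seed: Sample, model: Model) -> List[Sample]:
    """Evolving data synthesis: derive two new samples from one seed."""
    # Strategy 1: supplement and expand the seed's background knowledge.
    expanded = model(f"Add background knowledge to: {seed.instruction}")
    # Strategy 2: refine the analysis of the knowledge in the seed answer.
    refined = model(f"Refine and deepen the analysis in: {seed.response}")
    return [Sample(seed.instruction, expanded), Sample(seed.instruction, refined)]


def extract_sentences(document: str, is_relevant: Callable[[str], bool]) -> List[str]:
    """Literature reading: keep sentences accepted by a (fine-tuned) classifier."""
    # Naive split after sentence-final punctuation followed by whitespace.
    sentences = re.split(r"(?<=[.!?])\s+", document.strip())
    return [s for s in sentences if s and is_relevant(s)]


# Quality assurance: each predefined rule is a predicate over a sample.
# These placeholder rules stand in for the project's actual rule set.
Rule = Callable[[Sample], bool]


def non_empty(s: Sample) -> bool:
    return bool(s.instruction.strip()) and bool(s.response.strip())


def min_words(n: int) -> Rule:
    return lambda s: len(s.response.split()) >= n


def mentions_term(terms: Iterable[str]) -> Rule:
    vocab = [t.lower() for t in terms]
    return lambda s: any(t in s.response.lower() for t in vocab)


def quality_filter(samples: Iterable[Sample], rules: List[Rule]) -> List[Sample]:
    """Keep only the samples that satisfy every rule."""
    return [s for s in samples if all(rule(s) for rule in rules)]


if __name__ == "__main__":
    # Stand-in for an LLM: echoes the prompt so each step is traceable.
    def toy_model(prompt: str) -> str:
        return f"[model output for] {prompt}"

    seed = Sample("What controls ocean salinity?", "Evaporation and river input.")
    candidates = evolve(seed, toy_model)
    rules = [non_empty, min_words(5), mentions_term(["salinity"])]
    for kept in quality_filter(candidates, rules):
        print(kept.response)
```

With the echoing `toy_model`, only the first candidate survives filtering, because the refined response never mentions "salinity". Representing each rule as a separate predicate keeps the rule set easy to extend; a prompted review agent, as described above, would replace these simple string checks with model judgments.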

We trained OceanGPT on top of open-source models (such as Qwen, LLaMA, and MiniCPM) using instructions generated by the DoInstruct framework.